Installation

Prerequisites

Open Content Platform (OCP) has stack dependencies on Python 3.x, Python libraries, Kafka, and Postgres.

The first step is to install and configure those dependencies:

Kafka: increase the maximum message size, enable automatic topic creation, and increase the default partition count. Sample values for ./kafka/config/server.properties:

fetch.message.max.bytes=15728640
num.partitions=6
auto.create.topics.enable=true

And for ./kafka/config/consumer.properties:

fetch.message.max.bytes=15728640
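If you want to confirm the edits before restarting Kafka, here is a minimal Python sketch that reads a .properties file and compares it against the sample values above. The helper names and the simplified parser (plain key=value lines and #/! comments, no line continuations or escapes) are assumptions for illustration; nothing here is part of OCP or Kafka.

```python
from pathlib import Path

# Sample values from the section above; adjust them to your own sizing.
EXPECTED_SERVER = {
    "fetch.message.max.bytes": "15728640",
    "num.partitions": "6",
    "auto.create.topics.enable": "true",
}


def parse_properties(text):
    """Parse simple key=value lines, skipping blanks and #/! comments.

    Deliberately simplified: no line continuations or escaped characters.
    """
    props = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#!":
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def mismatches(props, expected):
    """Return {key: (found, expected)} for each key that differs or is missing."""
    return {
        key: (props.get(key), want)
        for key, want in expected.items()
        if props.get(key) != want
    }


def check_file(path, expected):
    """Read a properties file and report mismatches against expected values."""
    return mismatches(parse_properties(Path(path).read_text()), expected)
```

For example, `check_file("./kafka/config/server.properties", EXPECTED_SERVER)` returns an empty dict when all three values are set; for consumer.properties you would pass a dict containing only the fetch.message.max.bytes entry.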

Note: To simplify setup for a proof of concept (POC), you can put everything in the same runtime (a single server, VM, or container) on localhost, without redundancy or TLS certificates. We may create a container like this in the future to make it easier for newcomers to take OCP for a test drive.

Installing OCP

The examples below use PowerShell commands on a Windows server:

- Download OCP from GitHub

- Place it in a local directory (e.g. D:\Software\openContentPlatform)

- Upgrade the python pip installer:

> python -m pip install --upgrade pip

- Install dependent Python modules, using the packaged requirements file:

> pip install -r D:\Software\openContentPlatform\conf\requirements.txt --user

Note: You need to resolve any errors here. For example, if wheel files aren’t available for all required dependencies, pip will attempt to build them locally, which means you need developer build tools installed. If there are conflicts, you can either dig into the details and fix them, or step back to an earlier Python version.

For example, as of June 2020 you couldn’t use Python 3.8+ on Windows because confluent-kafka wheels were unavailable (https://github.com/confluentinc/confluent-kafka-python/issues/753). Installing the latest 3.7 release at the time (3.7.7) worked instead. This particular issue is fixed in confluent-kafka-python v1.6.0.
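Once pip reports success, you can double-check that each listed distribution is visible to the interpreter you will run OCP with. This is a minimal sketch with a deliberately simplified requirements parser (it skips comments and option lines such as -r, and ignores version specifiers, extras, and markers); the helper names are hypothetical. importlib.metadata needs Python 3.8 or later, and name matching (hyphen vs underscore) may be less forgiving on older versions, so treat a reported miss as a prompt to confirm with `pip show`.

```python
import re
from importlib import metadata
from pathlib import Path

# A distribution name starts with a letter or digit; matching stops at the
# first character that cannot be part of it (specifier, extras, marker).
NAME = re.compile(r"[A-Za-z0-9][A-Za-z0-9._-]*")


def requirement_names(lines):
    """Extract distribution names from requirements.txt-style lines."""
    names = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()
        if not line or line.startswith("-"):
            continue  # blank, comment-only, or an option such as -r
        match = NAME.match(line)
        if match:
            names.append(match.group(0))
    return names


def missing_requirements(names):
    """Return the names that are not installed for the running interpreter."""
    missing = []
    for name in names:
        try:
            metadata.version(name)
        except metadata.PackageNotFoundError:
            missing.append(name)
    return missing


def check_requirements_file(path):
    """List requirements from the file that the interpreter cannot find."""
    lines = Path(path).read_text().splitlines()
    return missing_requirements(requirement_names(lines))
```

Run it with the same interpreter as OCP, e.g. `check_requirements_file(r"D:\Software\openContentPlatform\conf\requirements.txt")`; an empty list means every name was found.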

- Configure OCP to run in your stack:

> python D:\Software\openContentPlatform\framework\lib\platformConfig.py

Note: Your base API user and key should be given all access levels (write/delete/admin). Make note of the user/key to use later.

Helpful advice: If this is a proof of concept and you’re planning to use the loopback address without setting up certs, we suggest explicitly using ‘127.0.0.1’ instead of ‘localhost’. Otherwise the requests library may wait up to 2 seconds before starting each request, due to bad routing, local configuration, or delays caused by IPv6 being preferred over IPv4.
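One way to see whether this applies to your machine is to check what the resolver returns for each name. If ‘localhost’ lists IPv6 (AF_INET6) first, clients may try ::1 before 127.0.0.1. A minimal sketch using only the standard library; the helper name is hypothetical, and 52707 is simply the port used in the API examples in this guide.

```python
import socket


def address_families(host, port=52707):
    """Return the address families the resolver offers for host, in order."""
    families = []
    for family, _type, _proto, _canon, _addr in socket.getaddrinfo(
        host, port, type=socket.SOCK_STREAM
    ):
        if family not in families:
            families.append(family)
    return families
```

For example, `[f.name for f in address_families("localhost")]` may print `['AF_INET6', 'AF_INET']` on a machine that prefers IPv6, while `address_families("127.0.0.1")` only ever returns IPv4.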

- Clone the directory for a few clients:

> md "D:\Software\ocpClient1"

> md "D:\Software\ocpClient2"

> md "D:\Software\ocpClient3"

> Copy-Item -Path "D:\Software\openContentPlatform\*" -Destination "D:\Software\ocpClient1" -Recurse

> Copy-Item -Path "D:\Software\openContentPlatform\*" -Destination "D:\Software\ocpClient2" -Recurse

> Copy-Item -Path "D:\Software\openContentPlatform\*" -Destination "D:\Software\ocpClient3" -Recurse
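If you script client provisioning in Python rather than PowerShell, the same cloning step can be sketched with shutil.copytree. This is an illustration only; clone_clients is a hypothetical helper, and it assumes the target client directories do not exist yet (copytree creates them).

```python
import shutil
from pathlib import Path


def clone_clients(source, target_root, names):
    """Copy the contents of source into target_root/<name> for each name."""
    source = Path(source)
    created = []
    for name in names:
        target = Path(target_root) / name
        # copytree creates target and raises if it already exists,
        # so an existing client directory is never overwritten by accident.
        shutil.copytree(source, target)
        created.append(target)
    return created
```

For example: `clone_clients(r"D:\Software\openContentPlatform", r"D:\Software", ["ocpClient1", "ocpClient2", "ocpClient3"])`.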

- Start the OCP server from the command line (you can also run it as a service/daemon):

> python D:\Software\openContentPlatform\framework\openContentPlatform.py

Note: On Windows you may need to start the platform and clients from an account with administrative privileges. Right-click the shortcut for your shell (PowerShell, pwsh, cmd.exe, or whichever you use) and choose “Run as Administrator”; this prevents the job-based services from exiting shortly after starting. Otherwise, the psutil library may throw an exception while attempting to track the health of the runtime process. The corresponding service log (.\runtime\log\service\) will indicate this with a line like: “AccessDenied errors on Windows usually mean the main process was not started as Administrator.”
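If you wrap the startup in your own script, you could check for elevation up front instead of discovering it in the service log. A minimal sketch, assuming the legacy Windows shell32 function IsUserAnAdmin is acceptable in your environment; the POSIX branch simply checks for root so the snippet also runs elsewhere. is_elevated is a hypothetical helper, not part of OCP.

```python
import ctypes
import os


def is_elevated():
    """Best-effort check for Administrator (Windows) or root (POSIX)."""
    if os.name == "nt":
        # shell32.IsUserAnAdmin returns nonzero when the process is elevated.
        return bool(ctypes.windll.shell32.IsUserAnAdmin())
    return os.geteuid() == 0
```

A startup script could print a warning and exit when `is_elevated()` is False, before launching the platform or any client.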

- Start some clients:

> python D:\Software\ocpClient1\framework\openContentClient.py resultProcessing

> python D:\Software\ocpClient2\framework\openContentClient.py contentGathering

> python D:\Software\ocpClient3\framework\openContentClient.py universalJob
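To start the three clients from one script, a sketch with subprocess could look like this. The folder-to-type mapping mirrors the commands above; client_commands and launch are hypothetical helpers, and each client runs under the same Python interpreter as the script.

```python
import subprocess
import sys
from pathlib import Path

# Client directory -> client type, mirroring the commands above.
CLIENTS = {
    "ocpClient1": "resultProcessing",
    "ocpClient2": "contentGathering",
    "ocpClient3": "universalJob",
}


def client_commands(software_root, clients=CLIENTS):
    """Build one argument list per client, using the current interpreter."""
    root = Path(software_root)
    return [
        [sys.executable, str(root / folder / "framework" / "openContentClient.py"), kind]
        for folder, kind in clients.items()
    ]


def launch(commands):
    """Start each client as a separate process and return the Popen handles."""
    return [subprocess.Popen(cmd) for cmd in commands]
```

For example, `launch(client_commands(r"D:\Software"))` from an elevated shell starts all three clients and returns their process handles.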

To confirm everything is up and running, you can use the REST API or review the server and client logs under each installation’s runtime directory.

To test via the API, open your REST utility of choice (e.g. Insomnia), add the apiUser and apiKey values you set up earlier with platformConfig to the request headers, and issue a GET on one of the API resources. For example, if you configured the platform to use http and localhost and haven’t changed the port or context root, you can list connected clients with a GET on http://localhost:52707/ocp/config/client. If the response shows clients running on a host named ‘MyDesktop’, you can request runtime details from those active clients one level deeper, with a GET on http://localhost:52707/ocp/config/client/MyDesktop.
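The same check can be scripted with only the standard library. A minimal sketch, assuming the header names are literally apiUser and apiKey, that the response body is JSON, and that the credentials shown are placeholders; build_request and fetch_json are hypothetical helpers.

```python
import json
import urllib.request


def build_request(base_url, resource, api_user, api_key):
    """Build a GET request carrying the API credentials as headers."""
    url = base_url.rstrip("/") + "/" + resource.lstrip("/")
    return urllib.request.Request(
        url, headers={"apiUser": api_user, "apiKey": api_key}, method="GET"
    )


def fetch_json(request, timeout=10):
    """Send the request and decode the response body as JSON."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

For example, `fetch_json(build_request("http://127.0.0.1:52707/ocp", "config/client", "myUser", "myKey"))` lists connected clients; append `/MyDesktop` to the resource for runtime details. Note that urllib normalizes header-name capitalization, which is harmless because HTTP header names are case-insensitive.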

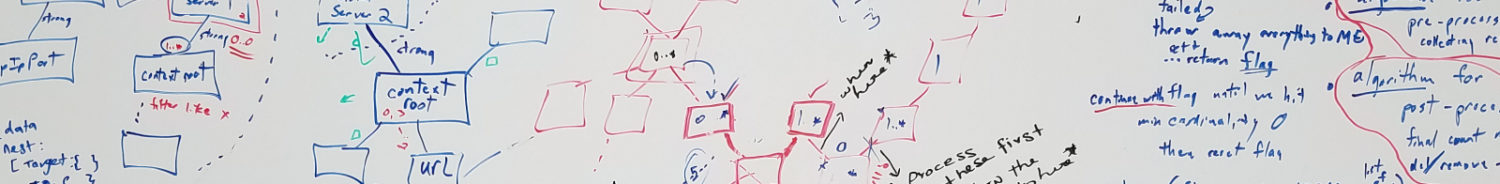

Infrastructure Footprint

Some considerations for the infrastructure footprint follow. Because OCP is intended as an enterprise product, production deployments will have a larger footprint as you plan for resilience.

For the stack dependencies, you would want multiple Kafka instances, and likewise multiple instances of ZooKeeper, which Kafka uses. For the database, you can achieve resilience in several ways: third-party or database-specific clustering, active syncs, virtualization-level mechanisms, or storage-level mechanisms.

For OCP itself, you would run a single server at any given time, monitor it for problems (via API interaction, log monitors, or process monitors), and fail over if the active service encounters a problem. This is a classic DR scenario. Clients are different: any number can run, of any type, and they can be spun up or down at any point in time. They are built to scale horizontally on demand. If you need more performance, capacity, or throughput for a certain client type, spin up another one; it will subscribe to the appropriate service and start working.
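On the monitoring side, one common design choice is to fail over only after several consecutive failed health probes, so a single slow or dropped check does not trigger a switch. A minimal sketch of that decision logic; the probe itself (API call, log check, or process check) is left out, and FailoverMonitor and its default threshold of 3 are hypothetical, not part of OCP.

```python
from collections import deque


class FailoverMonitor:
    """Track recent health-probe results and flag when to fail over."""

    def __init__(self, threshold=3):
        if threshold < 1:
            raise ValueError("threshold must be at least 1")
        self.threshold = threshold
        # Only the most recent `threshold` results are kept.
        self.recent = deque(maxlen=threshold)

    def record(self, healthy):
        """Record one probe result; return True once `threshold` consecutive probes failed."""
        self.recent.append(bool(healthy))
        return len(self.recent) == self.threshold and not any(self.recent)
```

A watchdog loop would call `record()` after each probe and start the standby server when it returns True.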

Clients would normally run in runtime environments separate from the server. You can have tooling automate spinning clients up and down; to enable that, copy or clone the directory structure as an “image” to deploy and execute on demand. Multiple clients (of the same or different types) may run inside the same execution environment, as long as each is deployed in its own sub-directory. This avoids file conflicts, mainly from logging.